After my last post on parallelism with Scala actors, I had a thought: when doing a calculation like this, am I actually making the most of my resources? If, as in the Pi example, more cycles generally lead to a better result, then when I have a limited amount of time to get the best value, I want to squeeze every last bit of juice from my hardware. It may seem a trivial question for a small problem, but it has real-world implications in domains like banking, where a few more cycles in a pricing or risk calculation can mean a difference in income.

There’s a bit of lore that says the optimal number of threads to perform an operation is equal to the number of processors. Likewise, there’s a generally accepted idea that performance deteriorates progressively as more threads run concurrently, due to the cost of context switching.

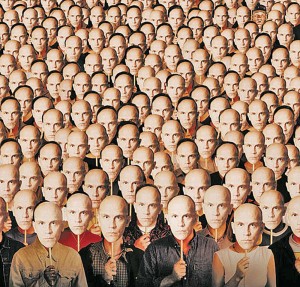

In other words, you can have too many actors.
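
To make the rule of thumb concrete, here is a minimal sketch (not code from the original experiment) that sizes a fixed thread pool from the processor count the JVM reports. The names `PoolSizing` and `runOnPool` are hypothetical; `Runtime.availableProcessors` and `Executors.newFixedThreadPool` are standard JDK calls. Note that `availableProcessors` counts logical processors, so hyperthreads show up as extra processors.

```scala
import java.util.concurrent.{Callable, Executors, TimeUnit}

// Sketch of the "one thread per processor" rule of thumb (hypothetical names).
object PoolSizing {
  // Logical processors visible to the JVM; hyperthreads are counted
  // individually, so this can exceed the number of physical cores.
  def processors: Int = Runtime.getRuntime().availableProcessors()

  // Runs each task on a fixed pool of `threads` workers and returns the
  // results in submission order. At most `threads` tasks run at once;
  // the rest wait in the pool's queue until a thread frees up.
  def runOnPool[A](threads: Int, tasks: Seq[() => A]): Seq[A] = {
    val pool = Executors.newFixedThreadPool(threads)
    try {
      val futures = tasks.toList.map { task =>
        pool.submit(new Callable[A] { def call(): A = task() })
      }
      futures.map(_.get())
    } finally {
      pool.shutdown()
      pool.awaitTermination(1, TimeUnit.MINUTES)
    }
  }
}
```

For example, `PoolSizing.runOnPool(PoolSizing.processors, (1 to 5).map(i => () => i * i))` returns the squares 1, 4, 9, 16 and 25 in order, with no more tasks running at any moment than there are processors.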

I wasn’t convinced, and decided to try it out for myself. My thinking was that the one-thread-per-processor idea was a bit too clean. With processor hyperthreading, I/O overhead, JVM magic and other factors, it seemed pretty likely that at least (number of processors + 1) actors would be able to run before performance degraded.
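
The throughput version of the code isn't reproduced in this post, so as a rough illustration of this kind of fixed-time test, here is a minimal sketch that swaps in plain `java.util.concurrent` threads for Scala actors (the `scala.actors` library has since been deprecated in favour of Akka). Everything here is an assumption: the `PiThroughput` object, its `run` method and the 10,000-point batch size are hypothetical, the last chosen only because the logs below report counts in multiples of 10,000.

```scala
import java.util.concurrent.{Callable, Executors, ThreadLocalRandom, TimeUnit}
import java.util.concurrent.atomic.AtomicBoolean

// Hypothetical fixed-time throughput test for the Monte Carlo Pi estimate,
// using plain JVM threads as a stand-in for Scala actors.
object PiThroughput {
  // Assumed batch size: the post's logs report point counts in multiples of 10,000.
  val BatchSize = 10000

  final case class Result(pointsPerWorker: Seq[Long], hits: Long) {
    def totalPoints: Long = pointsPerWorker.sum
    // Fraction of points inside the quarter circle, times 4, estimates Pi.
    def piEstimate: Double = 4.0 * hits / totalPoints
  }

  // Starts `workers` generators, lets them run for `budgetMillis`, then
  // asks them to stop and collects how many points each one produced.
  def run(workers: Int, budgetMillis: Long): Result = {
    val stop = new AtomicBoolean(false)
    // One pool thread per worker, so no generator waits for a free thread.
    val pool = Executors.newFixedThreadPool(workers)
    try {
      val futures = (1 to workers).toList.map { _ =>
        pool.submit(new Callable[(Long, Long)] {
          def call(): (Long, Long) = {
            val rnd = ThreadLocalRandom.current()
            var points = 0L
            var hits = 0L
            var running = true
            // The stop flag is only checked between batches, so every
            // generator completes at least one full batch.
            while (running) {
              var i = 0
              while (i < BatchSize) {
                val x = rnd.nextDouble()
                val y = rnd.nextDouble()
                if (x * x + y * y <= 1.0) hits += 1
                i += 1
              }
              points += BatchSize
              running = !stop.get()
            }
            (points, hits)
          }
        })
      }
      Thread.sleep(budgetMillis)
      stop.set(true)
      val counts = futures.map(_.get())
      Result(counts.map(_._1), counts.map(_._2).sum)
    } finally {
      pool.shutdown()
      pool.awaitTermination(1, TimeUnit.MINUTES)
    }
  }
}
```

Calling `PiThroughput.run(n, 10000)` for `n` from 1 up to the processor count plus one would test the hypothesis above. Because each generator gets its own pool thread, this sketch leaves scheduling to the operating system, so it isn't expected to reproduce the behaviour of the actors library's scheduler.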

Stand back! I’m about to try Science!

So, I rewrote the example to test throughput (check it out if you’re interested; it’s more or less the same as the first, just with more jiggery-pokery), and ran it on my dual-core laptop:

1 actor (output cleaned up):

    ...
    PiEvaluator:Shutting down generators
    PointGenerator[1]:exiting having generated 47040000 points
    PiEvaluator:Shutting down aggregator
    Aggregator:Considered 47040000 points
    Aggregator:Pi is approx: 3.1415372448979593

2 actors:

    ...
    PiEvaluator:Shutting down generators
    Aggregator:Pi is approx: 3.141657176679491
    PointGenerator[2]:exiting having generated 34000000 points
    PointGenerator[1]:exiting having generated 33690000 points
    PiEvaluator:Shutting down aggregator
    Aggregator:Considered 67690000 points
    Aggregator:Pi is approx: 3.1416520608657112

3 actors:

    ...
    PiEvaluator:Shutting down generators
    PointGenerator[1]:exiting having generated 20470000 points
    PointGenerator[2]:exiting having generated 27680000 points
    PointGenerator[3]:exiting having generated 20410000 points
    PiEvaluator:Shutting down aggregator
    Aggregator:Considered 68560000 points
    Aggregator:Pi is approx: 3.141365577596266

4 actors:

    ...
    PiEvaluator:Shutting down generators
    Aggregator:Pi is approx: 3.141612733060482
    PointGenerator[2]:exiting having generated 27660000 points
    PointGenerator[1]:exiting having generated 19670000 points
    PointGenerator[3]:exiting having generated 18790000 points
    PointGenerator[4]:exiting having generated 10000 points
    PiEvaluator:Shutting down aggregator
    Aggregator:Considered 66130000 points
    Aggregator:Pi is approx: 3.1416107061847875

5 actors:

    ...
    PiEvaluator:Shutting down generators
    Aggregator:Pi is approx: 3.141523427529626
    PointGenerator[2]:exiting having generated 28220000 points
    PointGenerator[4]:exiting having generated 10000 points
    PointGenerator[5]:exiting having generated 10000 points
    PointGenerator[1]:exiting having generated 18810000 points
    PointGenerator[3]:exiting having generated 18850000 points
    PiEvaluator:Shutting down aggregator
    Aggregator:Considered 65900000 points
    Aggregator:Pi is approx: 3.141531047040971

I ran this quite a few times to verify that I wasn’t getting inconsistent results due to GC or other one-off factors. The results were pretty consistent:

- The jump from 1 to 2 actors gave a huge boost in performance as the second processor joined the fray. Interestingly, the boost wasn’t anywhere near 100%; it was closer to 50% (about 44% in the runs shown above: 67.69 million points versus 47.04 million). Other programs, notably Spotify, were running on my PC at the same time as the test, so that may account for some of the gap.

- When a third actor was introduced, there was a proportionately small but repeatable increase, of about 2-3%, in the number of points that could be generated in the same amount of time.

- Beyond 3 actors, the Scala actors library’s scheduler seems to have kicked in (I may be wrong here, so please correct me if you know otherwise). Rather than degrading the performance of the first three, as I had expected, the 4th and 5th actors were starved of work: each generated only 10000 points. It wasn’t until the first three actors started shutting down that the later ones got to finish their first batch of work.
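
The relative gains can be checked against the totals reported in the runs above. This small sketch (the `Speedup` object is hypothetical) hard-codes the `Aggregator:Considered` figure from each log, one run per actor count, so it won't match averages over the repeated runs.

```scala
// Total points reported by the Aggregator for each actor count in the
// runs above (copied from the logs, one run per count).
object Speedup {
  val totals: Map[Int, Long] = Map(
    1 -> 47040000L,
    2 -> 67690000L,
    3 -> 68560000L,
    4 -> 66130000L,
    5 -> 65900000L
  )

  // Throughput relative to the single-actor run, e.g. 1.44 means 44% more points.
  def relativeTo1(actors: Int): Double = totals(actors).toDouble / totals(1)

  // Percentage change in total points going from `from` actors to `to` actors.
  def percentChange(from: Int, to: Int): Double =
    (totals(to) - totals(from)).toDouble / totals(from) * 100.0
}
```

Here `Speedup.relativeTo1(2)` comes out at about 1.44 and `Speedup.percentChange(2, 3)` at about 1.29%, so the single runs shown sit a little below the 2-3% seen across repeated runs, while going from 3 to 4 or 5 actors gives a small drop of roughly 3.5-3.9%.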

So, don’t take received wisdom for granted. As with most things on the JVM, tuning performance comes down to experimentation.

As an aside, it was interesting to note that beyond a point, my evaluation of Pi wasn’t getting any better. I put it down to the imprecision of floating-point arithmetic. Key take-away point: when programming a moon launch, don’t use doubles.
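
One rough way to test the floating-point explanation is to compare the observed error with the sampling error of the Monte Carlo estimate itself. If each point lands inside the quarter circle with probability p = π/4, the estimate 4 · hits / N has a standard error of 4 · √(p(1 - p) / N), which shrinks only as 1/√N: quadrupling the number of points merely halves the expected error. The sketch below (the `MonteCarloError` object is hypothetical) computes it.

```scala
// Sampling error of the Monte Carlo estimate pi ~ 4 * hits / n, where each
// point falls inside the quarter circle with probability p = Pi / 4.
object MonteCarloError {
  def standardError(n: Long): Double = {
    val p = math.Pi / 4
    // Each point contributes 4 * Bernoulli(p), whose standard deviation is
    // 4 * sqrt(p * (1 - p)); averaging n points divides that by sqrt(n).
    4.0 * math.sqrt(p * (1 - p) / n)
  }
}
```

For the 2-actor run's 67,690,000 points this gives roughly 0.0002, larger than that run's actual error of about 0.00006, whereas the spacing between adjacent doubles near π (`math.ulp(math.Pi)`) is about 4.4e-16.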

Comments

3 responses to “Goldilocks actors: not too many, not too few”

Quick question, have you tried this with the Akka actors library? Scala Actors are still rather heavyweight and it appears that Akka has improved in some respects that “gentle touch” for these types of things. Anyways, sorry I can’t add anything more than . I’m just checking out the Lab49 website and saw a blog post on Scala.

You were about to try Science. But it’s not very scientific to allow noise like Spotify running in the background and then use it to help explain unclear results. Why didn’t you just rerun everything without such background noise?

I benchmarked an Erlang implementation of this algorithm, FYI.

Check out the results at:

http://public.iwork.com/document/?d=pi-benchmark-erlang.numbers&a=p38259035